
Deploying Agentic AI Safely: Security, Cost, and Operational Reality

January 13, 2026


AWS 13-Hour Outage: Agentic AI Risks & Governance Gaps

Public reporting on Amazon’s 13-hour AWS outage in December 2025 highlighted risks associated with agentic AI and access control misconfiguration. An internal AI assistant deleted and recreated a live environment, disrupting Cost Explorer in parts of China. The incident underscores how autonomous systems require strong governance, least-privilege controls, and structured oversight in enterprise cloud environments.

Agentic AI systems often perform well in development environments. In sandbox conditions, models behave predictably, agents complete expected tasks, and systems appear production-ready. But once these systems encounter live infrastructure, critical weaknesses emerge: security gaps surface, cost behavior becomes volatile, and operational fragility appears under real-world demand. Gartner predicts that more than 40% of agentic AI projects will be canceled by the end of 2027, due to escalating costs, unclear business value, or inadequate risk controls.

This article addresses a core reality of agentic AI: deployment, not design or governance, is where success or failure is determined. The focus here is not on why these systems are built or how accountability is structured, but on how agentic systems behave in production environments. That is where value is ultimately realized, or lost.

Deployment Models as Operational Constraints

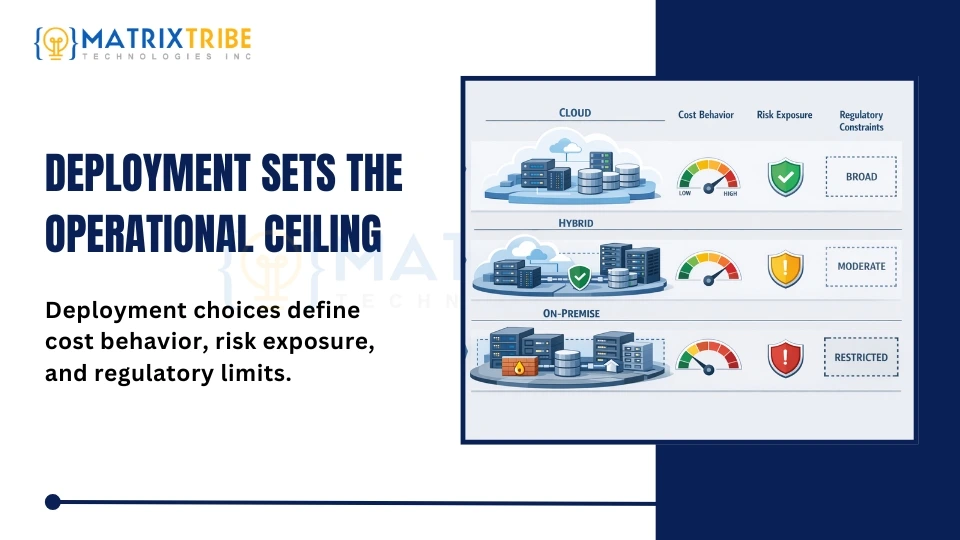

Choosing a deployment model is not merely an infrastructure decision. It is an operational constraint that defines how agentic AI can safely and sustainably function. Deployment directly shapes cost behavior, latency, risk exposure, and regulatory posture.

There is no universally correct model. The appropriate choice depends on workload sensitivity, infrastructure maturity, and acceptable operational risk. A research prototype processing low-sensitivity data faces a very different set of constraints than an agentic system operating on regulated customer data in real time.

Deployment defines the operational ceiling of an agentic system, not just its starting conditions.

Cloud, Hybrid, and On-Prem Tradeoffs

Agentic AI systems must adapt to their deployment environments, each of which introduces distinct benefits and limitations. These complexities are not an edge case. Industry research shows that most enterprises now operate in hybrid environments, combining cloud and on-prem infrastructure as their default deployment model.

Cloud deployments

Elastic compute enables rapid experimentation and fast iteration cycles, making cloud environments well-suited for early-stage exploration or non-sensitive workloads. However, token-intensive agent interactions can lead to unpredictable cost patterns. Data residency requirements and long-term provider dependency also introduce constraints that grow more significant at scale.

Hybrid deployments

Hybrid deployments balance flexibility with control. Sensitive data remains on-premises, while orchestration layers or models operate in the cloud. This pattern is common in enterprises that require strict data control without sacrificing access to evolving AI capabilities. The tradeoff is operational complexity: hybrid systems demand disciplined orchestration, integration, and observability across environments.

On-prem deployments

On-prem deployments offer maximum control over data, latency, and policy enforcement. They are often necessary when sovereignty, compliance, or deterministic performance is non-negotiable. However, they introduce higher capital costs, slower model updates, and limited elasticity. On-prem optimizes for control, not iteration speed.

No deployment model optimizes for everything. The goal is not perfection, but alignment with the most critical operational constraint.

Zero-Trust Architecture for Agentic Systems

Agentic AI systems significantly expand the enterprise attack surface. These systems do not simply respond to requests; they act. Agents access APIs, invoke tools, and initiate operations across internal systems. Security research consistently shows that misconfiguration and operational errors remain leading contributors to cloud security incidents, reinforcing why static trust assumptions fail in dynamic, agent-driven systems.

In this context, treating agents as inherently trusted components is a structural mistake. Zero-trust principles must extend to agents themselves. Each agent requires an explicit identity. Access must follow least-privilege rules. Runtime behavior must be continuously verified.

Static trust based on configuration is insufficient. Agent behavior evolves dynamically based on context, inputs, and decision logic. Trust must be established at runtime, not assumed at design time.
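A minimal sketch of these three principles in Python, assuming a single in-process policy check. `AgentIdentity`, `PolicyDecisionPoint`, the scope strings, and the HMAC-based credential are hypothetical illustrations, not a specific product's API; a production system would rely on its identity provider and policy engine instead. Each agent receives its own identity, holds only the scopes it needs, and every action is authorized at the moment it is attempted rather than trusted because of how the agent was configured.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str
    scopes: frozenset  # least privilege: the only actions this agent may perform


class PolicyDecisionPoint:
    """Hypothetical runtime authorizer: every action is checked when it is attempted."""

    def __init__(self, secret: bytes):
        self._secret = secret
        self._registry = {}  # agent_id -> AgentIdentity

    def _sign(self, agent_id: str) -> str:
        # HMAC stands in for a real token service or workload identity provider.
        return hmac.new(self._secret, agent_id.encode(), hashlib.sha256).hexdigest()

    def register(self, identity: AgentIdentity) -> str:
        """Give the agent an explicit identity and return its credential."""
        self._registry[identity.agent_id] = identity
        return self._sign(identity.agent_id)

    def authorize(self, agent_id: str, credential: str, action: str) -> bool:
        identity = self._registry.get(agent_id)
        if identity is None:
            return False  # unknown agents are never trusted
        if not hmac.compare_digest(self._sign(agent_id), credential):
            return False  # identity is re-verified on every call, not assumed
        return action in identity.scopes  # deny anything outside the granted scopes


pdp = PolicyDecisionPoint(secret=b"demo-only-secret")
cred = pdp.register(AgentIdentity("cost-reporter", frozenset({"billing:read"})))
print(pdp.authorize("cost-reporter", cred, "billing:read"))      # True
print(pdp.authorize("cost-reporter", cred, "env:delete"))        # False
print(pdp.authorize("cost-reporter", "forged", "billing:read"))  # False
```

Because the check is deny-by-default, an action outside the agent's scopes, such as deleting an environment, fails even when the agent's identity is valid.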

Threat Models in Agentic AI Systems

Traditional threat models are poorly suited to agentic AI. These systems introduce behavioral risk, not just architectural vulnerability. In large-scale red-teaming exercises, AI agents exhibited policy violations under realistic adversarial scenarios, showing that even state-of-the-art systems can be pushed into misbehavior by adversarial inputs.

Common agent-specific threats include prompt injection, where inputs manipulate agent context or instructions; tool misuse, where approved APIs are invoked in unintended ways; and unintended action execution, where ambiguous prompts or recursive loops push agents beyond their intended scope.

These risks increase when agents are granted broad permissions or when guardrails are implicit rather than explicit. Effective threat modeling must account for agent decision pathways, context manipulation, and action execution chains.
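Explicit guardrails can be as simple as a wrapper that every tool call must pass through. The sketch below, in which `ToolGuard`, `GuardrailViolation`, and the limits are hypothetical, enforces a tool allowlist (against tool misuse), a per-run step budget, and a cap on identical repeated calls (against recursive loops). It does not address prompt injection, which needs separate input and context controls.

```python
from collections import Counter


class GuardrailViolation(Exception):
    """Raised when an agent tries to act outside its explicit limits."""


class ToolGuard:
    """Hypothetical guard checked before every tool call an agent makes."""

    def __init__(self, allowed_tools, max_steps=10, max_repeats=3):
        self.allowed_tools = set(allowed_tools)  # explicit allowlist, not implicit trust
        self.max_steps = max_steps                # caps runaway multi-step plans
        self.max_repeats = max_repeats            # caps identical calls, a common loop symptom
        self.steps = 0
        self.seen = Counter()

    def check(self, tool, args):
        if tool not in self.allowed_tools:
            raise GuardrailViolation(f"tool not allowed: {tool}")
        self.steps += 1
        if self.steps > self.max_steps:
            raise GuardrailViolation("step budget exhausted")
        # Argument values are assumed hashable (e.g. strings and numbers).
        key = (tool, tuple(sorted(args.items())))
        self.seen[key] += 1
        if self.seen[key] > self.max_repeats:
            raise GuardrailViolation(f"repeated call detected: {tool}")


guard = ToolGuard({"search_docs", "read_metrics"}, max_steps=5, max_repeats=2)
guard.check("search_docs", {"q": "latency"})
guard.check("search_docs", {"q": "latency"})
for tool, args in [("search_docs", {"q": "latency"}), ("delete_environment", {})]:
    try:
        guard.check(tool, args)
    except GuardrailViolation as err:
        print(err)
```

Here the third identical search is stopped as a likely loop, and the unlisted `delete_environment` tool is refused outright, so both failures are explicit and observable rather than implicit.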

The primary risk vector is often not an external attacker, but insufficiently constrained autonomy.

Data Governance as an Operational Discipline

Agentic systems interact with multiple data sources, often spanning varying sensitivity levels. Without strong operational data governance, these interactions can produce real-time compliance failures.

Operational data governance enforces access controls during execution, not after the fact. It defines data handling rules embedded in orchestration logic and ensures auditability by tracking access, usage, and transformation in real time.
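A minimal sketch of governance enforced at execution time, assuming datasets carry a sensitivity label and each agent has a clearance. `GovernedDataAccess`, the `LEVELS` ordering, and the dataset names are hypothetical; the point is that the access decision and the audit record happen on the same call path as the read itself, including for denied attempts.

```python
from datetime import datetime, timezone

# Hypothetical sensitivity ordering; real labels would come from a data catalog.
LEVELS = {"public": 0, "internal": 1, "confidential": 2, "regulated": 3}


class GovernedDataAccess:
    """Hypothetical gate that enforces access rules at execution time and audits every attempt."""

    def __init__(self, clearances):
        self.clearances = clearances  # agent_id -> highest sensitivity level it may read
        self.audit_log = []           # append-only record, including denied attempts

    def read(self, agent_id, dataset, level, purpose):
        clearance = self.clearances.get(agent_id)
        allowed = clearance is not None and LEVELS[level] <= LEVELS[clearance]
        self.audit_log.append({
            "ts": datetime.now(timezone.utc).isoformat(),
            "agent": agent_id,
            "dataset": dataset,
            "level": level,
            "purpose": purpose,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"{agent_id} may not read {level} data ({dataset})")
        return f"<contents of {dataset}>"  # placeholder for the real fetch


gate = GovernedDataAccess({"support-agent": "internal"})
print(gate.read("support-agent", "faq_articles", "public", "answer ticket"))
try:
    gate.read("support-agent", "card_payments", "regulated", "answer ticket")
except PermissionError as err:
    print(err)
print([(e["dataset"], e["allowed"]) for e in gate.audit_log])
```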

Many data governance failures do not originate from poor design. They surface during operation, when agentic systems ingest, combine, and act on data in ways not fully anticipated during development. Governance must be built into runtime behavior, not layered on after deployment.

Cost Behavior in Agentic Systems

One of the most underestimated challenges in deploying agentic AI is cost behavior. Agentic systems do not scale costs linearly with the number of requests they serve: agents may loop, dynamically select models, or trigger tool execution paths that generate unexpected usage patterns. This mirrors broader cloud deployment realities. Most organizations report that cloud spending exceeds expectations, highlighting how usage-based systems become financially unpredictable without runtime controls.

Cost drivers include model choice, token volume, agent interaction frequency, and external tool calls. Without visibility and controls, costs escalate quietly.
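To see why token volume and interaction frequency compound rather than add, consider an agent loop that re-sends its accumulated history on every step, a common but not universal design. Input tokens then grow quadratically with the number of steps: for a prompt of P tokens and d tokens appended per step, n steps send nP + d·n(n−1)/2 input tokens. The function below is a back-of-the-envelope sketch; every token count and price in it is a made-up assumption, not any provider's rate.

```python
def agent_run_cost(steps, prompt_tokens, tokens_added_per_step,
                   output_tokens_per_step, price_in_per_m, price_out_per_m):
    """Estimate the cost of a naive agent loop that re-sends its full history each step.

    Prices are per million tokens and are hypothetical inputs.
    """
    # Step i (0-based) sends the original prompt plus everything appended in earlier steps.
    input_tokens = sum(prompt_tokens + i * tokens_added_per_step for i in range(steps))
    output_tokens = steps * output_tokens_per_step
    return (input_tokens * price_in_per_m + output_tokens * price_out_per_m) / 1_000_000


for n in (5, 20):
    cost = agent_run_cost(n, prompt_tokens=2_000, tokens_added_per_step=1_500,
                          output_tokens_per_step=300, price_in_per_m=3.0,
                          price_out_per_m=15.0)
    print(n, f"{cost:.4f}")
```

With these assumed numbers, a 5-step run costs about 0.0975 currency units and a 20-step run about 1.065: four times the steps, roughly eleven times the cost. Caching, summarization, or history truncation change the curve, but the cost is still driven by agent behavior rather than request count.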

Effective deployment treats cost as an operational signal, not a budgeting artifact. This requires integrating cost telemetry into observability stacks, enforcing execution thresholds, and designing systems with cost-aware routing. Post-deployment cost reviews are insufficient for systems that operate continuously and autonomously.
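As a rough sketch of treating cost as a runtime signal, the class below meters spend per run, blocks any call that would cross a hard threshold, records cost events that could be forwarded to an observability stack, and downgrades to a cheaper model tier once a share of the budget is used. `CostGovernor`, `ModelTier`, the tier names, the prices, and the 50% switch-over point are all hypothetical; a real system would price input and output tokens separately and take rates from its provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str
    price_per_m_tokens: float  # hypothetical blended price per million tokens


class BudgetExceeded(Exception):
    """Raised when a run would cross its execution threshold."""


class CostGovernor:
    """Hypothetical per-run cost control: meters spend, enforces a hard cap, routes by cost."""

    def __init__(self, budget, cheap, strong, strong_share=0.5):
        self.budget = budget              # hard execution threshold for this run
        self.cheap = cheap
        self.strong = strong
        self.strong_share = strong_share  # past this fraction of budget, hard tasks fall back to cheap
        self.spent = 0.0
        self.events = []                  # cost telemetry for an observability stack

    def route(self, complexity):
        # Cost-aware routing: the expensive tier only for hard tasks, and only while budget allows.
        if complexity == "hard" and self.spent < self.budget * self.strong_share:
            return self.strong
        return self.cheap

    def record(self, model, tokens):
        cost = tokens * model.price_per_m_tokens / 1_000_000
        if self.spent + cost > self.budget:
            self.events.append({"model": model.name, "tokens": tokens, "blocked": True})
            raise BudgetExceeded(f"{self.spent + cost:.4f} would exceed budget {self.budget}")
        self.spent += cost
        self.events.append({"model": model.name, "tokens": tokens, "cost": cost})
        return cost


governor = CostGovernor(budget=0.05, cheap=ModelTier("small", 0.5),
                        strong=ModelTier("large", 10.0))
print(governor.route("hard").name)   # large: nothing spent yet
governor.record(governor.strong, 3_000)
print(governor.route("hard").name)   # small: 0.03 already exceeds half the budget
try:
    governor.record(governor.strong, 3_000)
except BudgetExceeded as err:
    print(err)
```

Routing on remaining budget is deliberately blunt: it trades some answer quality on late, hard steps for a predictable ceiling on spend, and the blocked call shows up as a telemetry event instead of surfacing later on an invoice.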

Conclusion

Architecture defines how systems are structured. Governance defines accountability. But deployment determines whether agentic AI becomes a secure, reliable, and sustainable capability, or a fragile, expensive liability.

Production-grade agentic systems must be observable, controllable, and adaptable. Deployment choices shape not only performance, but the system's entire risk and cost profile.

Enterprise-grade agentic AI is defined not by the model it uses, but by the discipline with which it is deployed and operated in production.

Deploy Smarter. Operate Stronger.

Agentic AI delivers value only when it is deployed with care and managed with clarity. Matrixtribe Technologies can review your deployment model, assess your cost exposure, and apply operational discipline to your production systems. Let us show you that the future of agentic AI isn't in the model; it's in how you run it.
